CRSM Regression

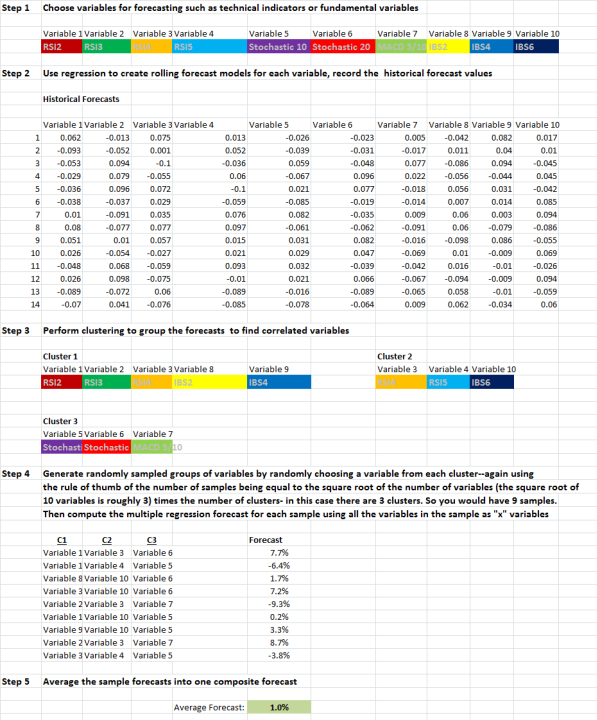

In the last post, I presented a schematic of how the Cluster Random Subspace Method (CRSM) would work for portfolio optimization. But the concept can be extended to prediction and classification. Obviously, "Random Forests" could incorporate this concept to build superior decision trees with fewer samples, but I would caution that decision trees tend to perform poorly when predicting financial time series (due to binary thresholding and over-fitting). Standard multiple regression, by contrast, has been a workhorse in finance because it is more robust and less prone to over-fitting than other machine-learning approaches. One of the challenges in regression is that it breaks down when you have a lot of variables to choose from and some of them are highly correlated: the coefficient estimates become unstable. There are many established ways of dealing with this problem (PCA, stepwise regression, etc.), but CRSM remains an excellent candidate since it can be used to form a robust ensemble forecast from a large group of predictors. It does not address the initial choice of variables, but at least it can automatically handle a large group of candidates that may contain some highly correlated variables. CRSM Regression is a good way to proceed when you have a lot of possible indicators but no pre-conceived ideas about how to construct a good model. Here is a process diagram for one of many possible methods to apply CRSM Regression:
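The steps of the diagram are not spelled out in the text, so what follows is only a minimal sketch of one plausible CRSM Regression pipeline, not the exact procedure in the post. Assumptions: predictors are grouped by an absolute-correlation threshold (a simple single-linkage grouping standing in for the clustering step), each random subspace takes one predictor from each of `k` randomly chosen clusters, each subspace is fit by ordinary least squares, and the ensemble forecast is the plain average. The names `cluster_predictors`, `crsm_regression`, `threshold`, `k` and `n_subspaces` are hypothetical.

```python
import numpy as np


def cluster_predictors(X, threshold=0.7):
    """Group columns of X whose |correlation| exceeds `threshold` (hypothetical helper).

    Single-linkage grouping via union-find; a stand-in for the clustering
    step, which may differ from the one used in the original diagram.
    """
    p = X.shape[1]
    corr = np.corrcoef(X, rowvar=False)
    parent = list(range(p))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(p):
        for j in range(i + 1, p):
            if abs(corr[i, j]) > threshold:
                parent[find(i)] = find(j)

    groups = {}
    for i in range(p):
        groups.setdefault(find(i), []).append(i)
    return list(groups.values())


def crsm_regression(X_train, y_train, X_test, n_subspaces=200, k=3,
                    threshold=0.7, seed=0):
    """Average the forecasts of OLS fits on cluster-based random subspaces."""
    rng = np.random.default_rng(seed)
    clusters = cluster_predictors(X_train, threshold)
    k = min(k, len(clusters))
    preds = np.zeros(X_test.shape[0])
    for _ in range(n_subspaces):
        # Pick k distinct clusters, then one random predictor from each.
        chosen = rng.choice(len(clusters), size=k, replace=False)
        cols = [int(rng.choice(clusters[c])) for c in chosen]
        # OLS with an intercept on this subspace only.
        A = np.column_stack([np.ones(len(y_train)), X_train[:, cols]])
        beta, *_ = np.linalg.lstsq(A, y_train, rcond=None)
        B = np.column_stack([np.ones(X_test.shape[0]), X_test[:, cols]])
        preds += B @ beta
    return preds / n_subspaces


# Usage on synthetic data: six predictors forming three near-duplicate pairs.
rng = np.random.default_rng(1)
n = 300
base = rng.normal(size=(n, 3))
X = np.column_stack([base, base + 0.05 * rng.normal(size=(n, 3))])
y = base @ np.array([0.5, -0.3, 0.2]) + 0.5 * rng.normal(size=n)
forecast = crsm_regression(X[:200], y[:200], X[200:])
print(len(cluster_predictors(X)), forecast[:3])
```

Because the grouping is single-linkage, any two predictors from different clusters have |correlation| at most `threshold`, so no subspace regression ever contains a highly correlated pair. Averaging across many subspaces is what turns the individual small regressions into an ensemble forecast.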

Step 5 is really big 😉

One thing I'd note is that random forests DON'T overfit, given enough trees. A single random decision tree definitely can overfit. Several hundred of them? Not so much.

Ilya, decision trees can overfit; I wasn't specifically referring to random forests. Decision trees can overfit because they can split numerous times and can be highly non-linear with respect to different variables, and many of these splits can be useless for out-of-sample performance. One has a choice between pruning a tree, cross-validation, etc., or simply bagging unpruned trees as in random forests to get something reasonable. Where there is a choice between random forests and CRSM-type random forests, the latter will produce more diversification and probably a more robust/superior result.

best

david

It’s a neat paper, and I think that clustering before taking a random subsample makes a lot of sense in this application since investments in the same asset class are highly correlated. On the other hand, I’ve found that putting layers of formal mathematics on top of a set of false assumptions rarely adds value.

As soon as you assume an investment will have constant variance (or, even worse, that historical variance will persist into the future), your model is fiction at best. A lot of mean-variance optimizations also assume returns are normally distributed, which is a very dangerous game.

I've been seeing increasingly complex models (option surfaces to predict the distribution of returns, factor models to predict correlations), but even there you are building on a foundation of assumptions known to be false (especially within the option pricing models).

Ryan, you are correct: the purpose of the paper was to highlight random subsampling using clusters. Mean-variance was just an example; in practice it could be any allocation method. As for your broader points: I agree investments don't have constant variance, but is predicted variance better than historical variance (especially at shorter lags)? Is no prediction of variance better than a reasonable prediction of variance? What would be the alternative if you can't rely on momentum, variance, or correlations? Does that mean you think nothing is predictable or possible? If so, are you recommending buy and hold? I'm being unbiased here. I think it is easy to point out the flaws in everything, but at the end of the day, if we choose to be proactive (vs. passive), a quant will have to pick the most realistic and practical approach, one that may still have some flaws but is still better than making no assumptions. My point is that all models are flawed; it's just a matter of degree.
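The question of whether a forecast of variance beats a plain historical estimate can be checked directly. This is a minimal sketch, assuming a RiskMetrics-style exponentially weighted moving average (lambda = 0.94) as the "predicted" variance and a 250-day trailing mean of squared returns as the "historical" one. Neither method, nor the simulated regime shift, comes from the thread, and the function names are hypothetical. The sketch shows how to run the comparison; it does not settle which estimator wins in general.

```python
import numpy as np


def ewma_variance(returns, lam=0.94):
    """One-step-ahead EWMA variance forecasts; f[t] uses only returns[:t]."""
    f = np.full(len(returns), np.nan)
    f[1] = returns[0] ** 2  # crude seed, so early forecasts are unreliable
    for t in range(2, len(returns)):
        f[t] = lam * f[t - 1] + (1 - lam) * returns[t - 1] ** 2
    return f


def trailing_variance(returns, window=250):
    """Historical estimate: mean squared return over the previous `window` days."""
    f = np.full(len(returns), np.nan)
    for t in range(window, len(returns)):
        f[t] = np.mean(returns[t - window:t] ** 2)
    return f


# Simulated zero-mean returns whose volatility triples halfway through.
rng = np.random.default_rng(42)
true_var = np.r_[np.full(500, 0.01 ** 2), np.full(500, 0.03 ** 2)]
returns = np.sqrt(true_var) * rng.standard_normal(1000)

ewma = ewma_variance(returns)
hist = trailing_variance(returns)
scored = slice(250, None)  # only days where both forecasts exist
mse_ewma = np.mean((ewma[scored] - true_var[scored]) ** 2)
mse_hist = np.mean((hist[scored] - true_var[scored]) ** 2)
print(f"EWMA MSE: {mse_ewma:.3e}  trailing MSE: {mse_hist:.3e}")
```

Because the returns are simulated, both forecasts can be scored against the true variance. On real data the true variance is unobservable, so one would score against a noisy proxy such as the next day's squared return.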

best

david