Combining Acceleration and Volatility into a Non-Linear Filter (NLV)

The last two posts presented a novel way of incorporating acceleration as an alternative measure of risk. The preliminary results, and also intuition, suggest that it deserves consideration as another piece of information that can be used to forecast risk. While I posed the question as to whether acceleration was a “better” indicator than volatility, the more useful question is whether we can combine the two into an indicator that is better than either in isolation. Traditional volatility is obviously more widely used, and is critical for solving traditional portfolio optimization. Therefore, it is a logical choice as a baseline indicator.

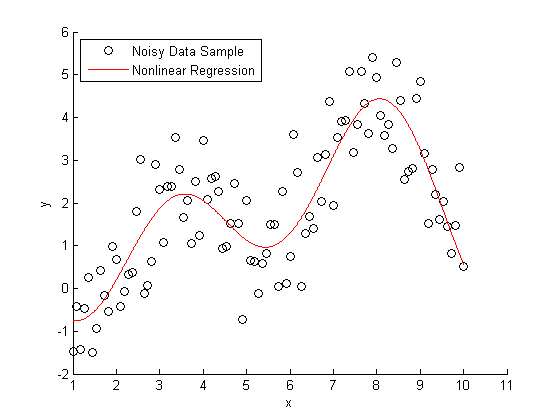

Linear filters such as moving averages and regression generate output that is a linear function of the input. Non-linear filters, in contrast, generate output that is non-linear with respect to the input. An example of a non-linear filter would be polynomial regression, or even the humble median. The goal of a non-linear filter is to weight the data in a way that produces more accurate output. By using a non-linear filter it is possible to substantially reduce lag and increase responsiveness. Since volatility is highly predictable, it stands to reason that we would like to reduce lag and increase responsiveness as much as possible to generate superior results.
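To make the linear versus non-linear distinction concrete, here is a minimal sketch on random data (the data and the `rolling` helper are assumptions for illustration only). A linear filter obeys superposition: filtering the sum of two series gives the sum of the filtered series. A rolling mean passes that check, while a rolling median generally does not.

```python
import numpy as np

def rolling(x, window, fn):
    """Apply fn to each trailing window of x (hypothetical helper, no padding)."""
    return np.array([fn(x[i - window + 1 : i + 1]) for i in range(window - 1, len(x))])

rng = np.random.default_rng(0)
a = rng.normal(size=50)
b = rng.normal(size=50)

# Linear filter: the moving average of (a + b) equals MA(a) + MA(b).
ma_linear = np.allclose(
    rolling(a + b, 5, np.mean),
    rolling(a, 5, np.mean) + rolling(b, 5, np.mean),
)

# Non-linear filter: the rolling median generally breaks superposition.
med_linear = np.allclose(
    rolling(a + b, 5, np.median),
    rolling(a, 5, np.median) + rolling(b, 5, np.median),
)

print("moving average obeys superposition:", ma_linear)
print("rolling median obeys superposition:", med_linear)
```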

So how would we create a volatility measure that incorporates acceleration? The answer is that we need to dynamically weight each squared deviation from the average as a function of the magnitude of acceleration, where greater absolute acceleration should generate an exponentially higher weighting on each data point. Here is how it is calculated for a 10-day NLV. (Don’t panic, I will post a spreadsheet in the next post):

A) Calculate the rolling series of squared deviations: each daily log return minus the average return, squared

B) Calculate the rolling series of the absolute value of the first difference in log returns (acceleration/error)

C) Take the current day’s value from B and divide it by some optional average of B, such as a 20-day average, to get a relative value for acceleration

D) Raise C to the power of 3, or some constant of your choosing. This is the rolling series of relative acceleration constants

E) Weight each daily value in A over the last 10 days by that day’s D value divided by the sum of D values over the last 10 days

F) Divide the weighted sum from E by the sum of the weighting constants (each D value divided by the sum of D values). This is the NLV, and it is analogous to the computation of a traditional weighted moving average
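Until the spreadsheet is posted, steps A through F can be sketched in Python roughly as follows. This is one reading of the steps, not a definitive implementation: the function name `nlv`, its parameter names, the use of a trailing average in step C, and the equal-weight fallback when all weights are zero are assumptions. The output is a weighted average of squared deviations (variance units); take the square root if you want volatility units.

```python
import numpy as np

def nlv(log_returns, window=10, accel_lookback=20, exponent=3.0):
    """Sketch of the non-linear volatility filter (variance units)."""
    r = np.asarray(log_returns, dtype=float)
    n = len(r)

    # B) absolute first difference of log returns ("acceleration")
    accel = np.full(n, np.nan)
    accel[1:] = np.abs(np.diff(r))

    # C, D) acceleration relative to its trailing average, raised to a power
    d = np.full(n, np.nan)
    for t in range(accel_lookback, n):
        avg = accel[t - accel_lookback + 1 : t + 1].mean()
        d[t] = (accel[t] / avg) ** exponent if avg > 0 else 0.0

    out = np.full(n, np.nan)
    first = accel_lookback + window - 1  # first day with a full window of D values
    for t in range(first, n):
        win = r[t - window + 1 : t + 1]
        sq_dev = (win - win.mean()) ** 2  # A) squared deviations from the window mean
        w = d[t - window + 1 : t + 1]
        total = w.sum()
        # E) normalise the weights; fall back to equal weights if they are all zero (assumption)
        w = w / total if total > 0 else np.full(window, 1.0 / window)
        # F) weighted average, as in a weighted moving average
        out[t] = np.sum(w * sq_dev) / w.sum()
    return out
```

With `exponent=0.0` every weight is equal, so the result collapses to the ordinary (population) variance of the window, which makes a convenient sanity check.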

And now for the punchline–here are the results versus the different alternative measures presented in the last post:

The concept shows promise as a hybrid measure of volatility that incorporates acceleration. The general calculation can be applied many different ways but this method is fairly intuitive and generic. In the next post I will show how to make this non-linear filter even more responsive to changes in volatility.

How do you know your progressively better results are not due to data mining?

In my experience, methods that can be tweaked (pretty much all of them) are incredibly difficult to meaningfully backtest, especially if you are optimizing parameters using the data you are testing against. Something as simple as trying three or four values of k and picking the best performing one invalidates your results due to look-ahead bias.

In these situations I prefer running a sensitivity analysis (for example k against the realized Sharpe ratio) to get a feel for how critical the parameter values are for model performance. If you get consistent outperformance for many values, the model is probably robust. If you need to hit the perfect value to get performance, you are just gambling.

An alternative method is to numerically optimize for k in rolling periods of data to see if the “best” parameter changes over time or stays constant.

A more correct approach would be to separate your data into a test and evaluation set, but I find that it’s painful to remove several years of data from the analysis, and as soon as you use the evaluation set a couple of times the same data-mining problems reappear.

The crux of the issue is that a backtest can only say how you might have performed in the past. What you really want to know is the probability of outperformance in future markets.

Ryan,

First off, all numbers for k seem to do well, and progressively better up to a point for higher values. Not that this is validation by itself, but obviously if the results jumped around randomly as a function of k it would be of concern. The same is true for the average used for the relative acceleration: this parameter is not sensitive, and different lookbacks produce virtually the same results. In either case the “concept” of combining volatility and acceleration into one measure makes logical sense (data points with the highest weight will be those with a high deviation from the mean preceded by strong acceleration).

I’m going to take your remarks as assuming I am not familiar with such testing issues? And by the way, regarding your final remark, if you do happen to have a crystal ball that tells you the probability of outperformance in future markets, I would pay you handsomely to license it. I understand in/out of sample, data mining bias, double blind testing etc. You can read some of my comments touching on these issues in prior posts. No matter how good your methodology is, there is still always a chance that you have found something that does not really exist. Minimizing this bias is obviously important for serious research. The point is not about testing methodology, but rather to introduce ideas, all of which can be thoroughly tested by readers on their own. Some of these ideas are fresh off the whiteboard, others have been tested extensively. This is a blog, not an academic journal, and the main purpose is to inspire new avenues/concepts for research. Thinking openly without restraints and doing limited testing is a good way to evaluate whether to invest time to seriously research a topic, and it also permits superior creativity. Yes you burn some data, but in my experience it is still valuable.

I would add that most seemingly official and serious journal articles are technically data-mined despite their methodology, since few authors report an idea that they had that didn’t work in testing, or the number of concepts tested to find good results. Furthermore, there is too much of a tendency to build upon prior research, which compounds this problem. As a consequence I am less impressed overall by their “robustness checks” and methodology (since that is my own responsibility in the background, and that of everyone else who does research), and more impressed by new ways of thinking about things, whether valid after real scrutiny or not, since these lead to an edge on the competition rather than blindly following what everyone else agrees should work. Every single standout hedge fund or trading firm was a leader rather than a follower in pushing the edges of what many thought was possible, most in the face of criticism from Nobel Prize winners such as Samuelson and Fama etc.

best

david


Hi David,

I like your general idea of looking at the volatility of acceleration (either sd of acc or MAE of acc) and the associated “leading” role of the new risk indicator. But after I carefully examined the idea from the frequency domain I have some further questions.

Let us use the continuous time df/dt to simplify the discussion. Basically, df/dt would translate to jw*F(w). In fact the acceleration here can be viewed as -w^2*F(w), which is the original signal passed through a high-pass filter. There are many different high-pass filters one could use, so how could we decide which characteristics are desired in estimating the uncertainty/risk? Maybe it could be the derivative of acceleration, or somewhere between the acceleration and velocity.
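As a rough illustration of the high-pass point in discrete time, the sketch below computes the gain of repeated first differences at a few frequencies. It relies on the standard result that a first difference has frequency response 1 - e^{-jw}, so its gain is 2|sin(w/2)|; the function name `difference_gain` and the chosen frequencies are assumptions for illustration. For small w the gains approximate w, w^2 and w^3, matching the continuous-time picture above.

```python
import numpy as np

def difference_gain(omega, order):
    """Gain of the order-th discrete difference at angular frequency omega (radians per sample)."""
    return np.abs(1 - np.exp(-1j * np.asarray(omega, dtype=float))) ** order

omega = np.array([0.01, 0.1, 1.0, np.pi])

# order 1 ~ velocity, order 2 ~ acceleration, order 3 ~ jerk
for order in (1, 2, 3):
    print(order, difference_gain(omega, order))
```

Each extra difference suppresses slow components more strongly and amplifies the fastest ones more, which is one way to see why higher-order differences look noisier.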

The VOA results look good but there might be something deeper than second order differences to reveal the uncertainties.

Hi jacky, thank you. I agree that there are a wide variety of different filters that can be used. I plan to present some alternative methods- but I haven’t fully explored many of the possibilities yet.

best

david

As always, some interesting ideas David. Do you think that looking further into acceleration (the jerk and the jounce) would be too volatile to be of use? I tried a few data series, and at a cursory look these (the derivative of acceleration and its second derivative) seem quite noisy.

Hi Larry, thanks, hope you are doing well. I agree that with this specific method and with cubing (i.e. raising to the third power) the indicator is quite noisy and only appropriate for futures trading or short-term trading of the SPY. There are a few ways to rectify that: smoothing, different transforms on the filter, or different filters altogether. Hopefully the general idea will inspire some creative construction of those methods.

best

david

Hi David, could you please detail a little bit how position sizing takes volatility measures into account in your example? I guess the position is reduced when volatility increases, but I’d appreciate your confirmation – Florent